Without question, cinema has given audiences an abundant collection of memorable villains. From the chilling menace of Hannibal Lecter to the sinister allure of Darth Vader, villains have often driven a film’s most captivating dynamics. Yet few have embodied raw physical intimidation quite like Bolo Yeung, whose contributions to the villain archetype have cemented his place among the most imposing movie villains of all time.

Bolo Yeung, born Yang Sze, is an iconic figure in martial arts cinema. He came to film from competitive bodybuilding, and his gargantuan physique set him apart immediately. Yet it was his commanding screen presence and natural ability to embody villainy that truly distinguished him.

Yeung’s breakthrough role as Bolo in Bruce Lee’s “Enter the Dragon” (1973) was his ticket to infamy. The character became the personification of the unyielding antagonist: his fearsome appearance, with rippling muscles and a piercing gaze, was matched only by his relentless savagery. Bolo didn’t just intimidate; he terrorized, a force of nature that seemed impossible to stop.

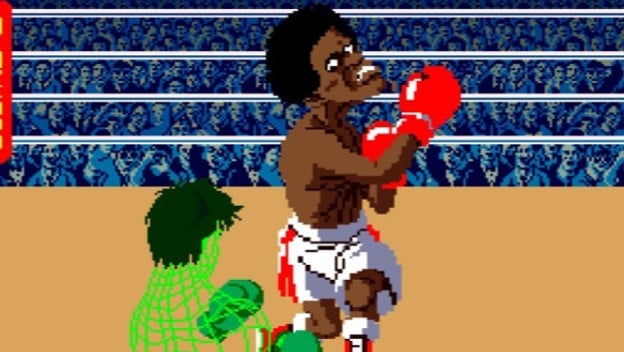

Yeung added to his legacy of cinematic villainy in “Bloodsport” (1988), opposite Jean-Claude Van Damme. As the monstrous Chong Li, he made a lasting impression through his terrifying physicality and menacing aura. Chong Li was not just another opponent in the Kumite but the very essence of antagonism: lethal, remorseless, and overwhelmingly powerful. Yeung’s performance turned what could have been a generic tough guy into a character as memorable as the film’s protagonist.

What makes Bolo Yeung such an iconic villain, though, goes beyond his hulking frame and martial arts prowess. His performances have a depth that is often overlooked. Behind the frightening exteriors of his characters, Yeung conveys an inner complexity, a cold intelligence every bit as threatening as his physical strength. This sinister sophistication makes his villains psychological threats as well as physical ones.

Throughout his career, Yeung has excelled in roles that demand not just brute force but a distinctive brand of malice and ruthlessness. He can unsettle an audience with a mere glance, a quiet word, or a sinister smile, no mean feat for an actor whose physical presence is so overwhelming.

Yet despite his imposing screen presence and villainous roles, Yeung is described by many as gentle, humble, and exceptionally disciplined off-screen. This contrast underscores the brilliance of his acting: a gentle giant who becomes an embodiment of fear and terror once the cameras start rolling.

In conclusion, Bolo Yeung deserves a place in the pantheon of great cinema villains. Through his exceptional physical presence, his charismatic performances, and the depth he brings to his characters, Yeung has forged a unique path in film history. His villains are more than adversaries for the hero; they are unforgettable characters who stay with audiences long after the credits roll. A colossus of cinema villainy, Yeung has shown time and again that a villain’s role is not merely to be defeated but to leave a lasting mark on the story and on the audience. That is the mark of a truly great antagonist, and Bolo Yeung delivers it like no other.

This blog post was created with help from ChatGPT Pro.