As I head to the National for the first time, this is a topic I have been thinking about for quite some time, and a recent video inspired me to put this together with help from ChatGPT’s o3 model doing deep research. Enjoy!

Introduction: Grading Under the Microscope

Sports card grading is the backbone of the collectibles hobby – a PSA 10 vs PSA 9 on the same card can mean thousands of dollars of difference in value. Yet the process behind those grades has remained stubbornly old-fashioned, relying on human eyes and judgment. In an age of artificial intelligence and computer vision, many are asking: why hasn’t this industry embraced technology for more consistent, transparent results? The sports card grading industry is booming (PSA alone graded 13.5 million items in 2023, commanding ~78% of the market), but its grading methods have seen little modernization. It’s a system well overdue for a shakeup – and AI might be the perfect solution.

The Human Element: Trusted but Inconsistent

For over 30 years, Professional Sports Authenticator (PSA) has set the standard in grading, building a reputation for expertise and consistency. Many collectors trust PSA’s human graders to spot subtle defects and assess a card’s overall appeal in ways a machine allegedly cannot. This trust and track record are why PSA-graded cards often sell for more than those graded by newer, tech-driven companies. Human graders can apply nuanced judgment – understanding vintage card print idiosyncrasies, knowing how an odd factory cut might affect eye appeal, etc. – which some hobbyists still value.

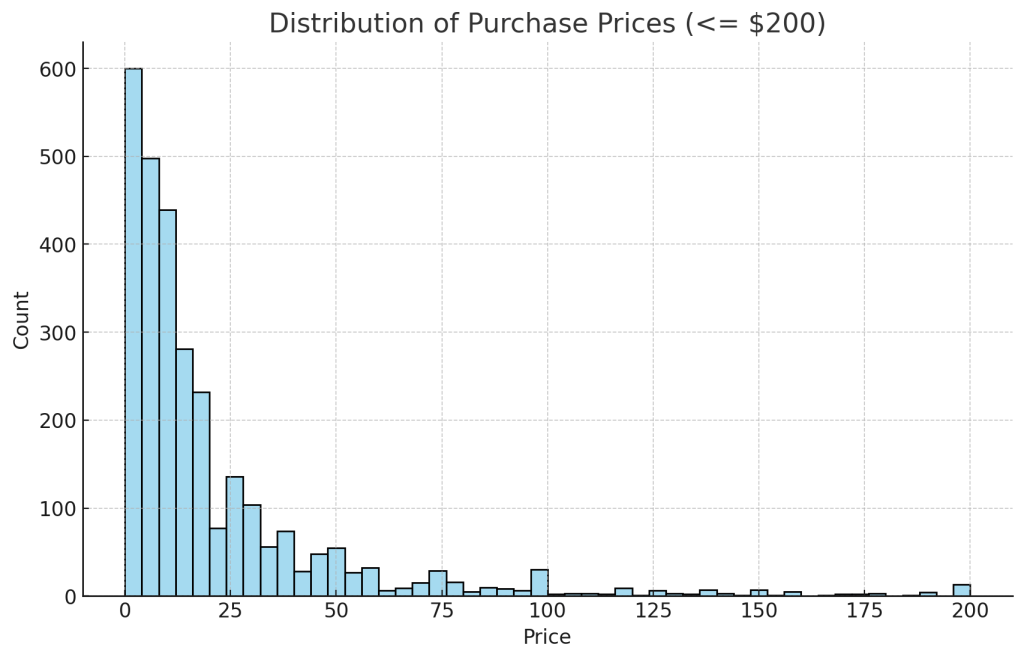

However, the human touch has undeniable downsides. Grading is inherently subjective: two experienced graders might assign different scores to the same card. Mood, fatigue, or unconscious bias can creep in. And the job is essentially a high-volume, low-wage one, meaning even diligent graders face burnout and mistakes in a deluge of submissions. Over the pandemic boom, PSA was receiving over 500,000 cards per week, leading to a backlog of 12+ million cards by early 2021. They had to suspend submissions for months and hire 1,200 new employees to catch up. Relying purely on human labor proved to be a bottleneck – an expensive, slow, and error-prone way to scale. Inconsistencies inevitably arise under such strain, frustrating collectors who crack cards out of their slabs and resubmit them hoping for a higher grade on a luckier day. This “grading lottery” is accepted as part of the hobby, but it shouldn’t be.

Anecdotes of inconsistency abound: Collectors tell stories of a card graded PSA 7 on one submission coming back PSA 8 on another, or vice versa. One hobbyist recounts cracking a high-grade vintage card to try his luck again – only to have it come back with an even lower grade, and eventually marked as “trimmed” by a different company. While such tales may be outliers statistically, they underscore a core point: human grading isn’t perfectly reproducible. As one vintage card expert put it, in a high-volume environment “mistakes every which way will happen.” The lack of consistency not only erodes collector confidence but actively incentivizes wasteful behavior like repeated resubmissions.

Published Standards, Unpredictable Results

What’s ironic is that the major grading companies publish clear grading standards. PSA’s own guide, for instance, specifies that a Gem Mint 10 card must be centered 55/45 or better on the front (no worse than 60/40 for a Mint 9), with only minor flaws like a tiny print spot allowed. Those are numeric thresholds that a computer can measure with pixel precision. Attributes like corner sharpness, edge chipping, and surface gloss might seem more subjective, but they can be quantified too – e.g. by analyzing images for wear patterns or gloss variance. In other words, the criteria for grading a card are largely structured and known.
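To make the "a computer can measure this" point concrete, here is a minimal sketch of a front-centering check against the two thresholds quoted above (55/45 for a 10, 60/40 for a 9). It assumes the four border widths have already been measured from a scan, in pixels or millimeters; the function names are made up for illustration, and it looks only at front centering, not the back or any other criterion.

```python
from typing import Optional


def within_centering(border_a: float, border_b: float, max_larger_pct: float) -> bool:
    """True if the wider of two opposing borders takes up at most
    max_larger_pct percent of their combined width."""
    total = border_a + border_b
    if border_a < 0 or border_b < 0 or total <= 0:
        raise ValueError("border widths must be non-negative and not both zero")
    # Cross-multiply instead of dividing so an exact 55/45 is not
    # rejected because of floating-point rounding.
    return max(border_a, border_b) * 100 <= max_larger_pct * total


def front_centering_ceiling(left: float, right: float,
                            top: float, bottom: float) -> Optional[int]:
    """Highest grade (10 or 9) that front centering alone would allow under
    the thresholds quoted in the text; None if worse than 60/40."""
    for grade, limit in ((10, 55), (9, 60)):
        if within_centering(left, right, limit) and within_centering(top, bottom, limit):
            return grade
    return None


# Borders measured in pixels (hypothetical numbers).
print(front_centering_ceiling(110, 90, 100, 100))  # 55/45 left-right
print(front_centering_ceiling(62, 38, 50, 50))     # 62/38 left-right
```

The hard part in practice is locating the borders reliably in the image, which this sketch skips entirely. Once the widths are known, the threshold check itself is a few lines of arithmetic.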

If an AI system knows that a certain scratch or centering offset knocks a card down to a 9, it will apply that rule uniformly every time. A human, by contrast, might overlook a faint scratch at 5pm on a Friday or be slightly lenient on centering for a popular rookie card. The unpredictability of human grading has real consequences: collectors sometimes play “submitter roulette,” hoping their card catches a grader on a generous day. This unpredictability is so entrenched that an entire subculture of cracking and resubmitting cards exists, attempting to turn PSA 9s into PSA 10s through persistence. It’s a wasteful practice that skews population reports and costs collectors money on extra fees – one that could be curbed if grading outcomes were consistent and repeatable.

A Hobby Tailor-Made for AI

Trading cards are an ideal use-case for AI and computer vision. Unlike, say, comic books or magazines (which have dozens of pages, staples, and complex wear patterns to evaluate), a sports card is a simple, two-sided object of standard size. Grading essentially boils down to assessing four sub-criteria – centering, corners, edges, surface – according to well-defined guidelines. This is exactly the kind of structured visual task that advanced imaging systems excel at. Modern AI can scan a high-resolution image of a card and detect microscopic flaws in an instant. Machine vision doesn’t get tired or biased; it will measure a border centering as 62/38 every time, without rounding up to “approximately 60/40” out of sympathy.

In fact, several companies have proven that the technology is ready. TAG Grading (Technical Authentication & Grading) uses a multi-patented computer vision system to grade cards on a 1,000-point scale that maps to the 1–10 spectrum. Every TAG slab comes with a digital report pinpointing every defect, and the company boldly touts “unrivaled accuracy and consistency” in grading. Similarly, Arena Club (co-founded by Derek Jeter) launched in 2022 promising AI-assisted grading to remove human error. Arena Club’s system scans each card and produces four sub-grades plus an overall grade, with a detailed report of flaws. “You can clearly see why you got your grade,” says Arena’s CTO, highlighting that AI makes grading consistent across different cards and doesn’t depend on the grader. In other words, the same card should always get the same grade – the ultimate goal of any grading process.

Even PSA itself has dabbled in this arena. In early 2021, PSA acquired Genamint Inc., a tech startup focused on automated card diagnostics. The idea was to integrate computer vision that could measure centering, detect surface issues or alterations, and even “fingerprint” each card to track if the same item gets resubmitted. PSA’s leadership acknowledged that bringing in technology would allow them to grade more cards faster while improving accuracy. Notably, one benefit of Genamint’s card fingerprinting is deterring the crack-and-resubmit cycle by recognizing cards that have been graded before. (One can’t help but wonder if eliminating resubmissions – and the extra fees they generate – was truly in PSA’s financial interest, which might explain why this fingerprinting feature isn’t visibly advertised to collectors.)
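How Genamint actually fingerprints a card isn't public as far as this article knows, so the following is not their method. It is only a toy illustration of the general idea, using a simple "average hash": reduce a scan to a small grid of grayscale values, turn it into a bit pattern, and compare patterns between submissions. The input grids, function names, and the 2-bit tolerance are all assumptions for the sake of the example.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """Pack a small grayscale grid into an integer: one bit per pixel,
    set when that pixel is brighter than the grid's mean."""
    flat = [p for row in pixels for p in row]
    if not flat:
        raise ValueError("pixel grid is empty")
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions where the two hashes differ."""
    return bin(a ^ b).count("1")


def likely_same_card(scan_a: list[list[int]], scan_b: list[list[int]],
                     max_differing_bits: int = 2) -> bool:
    """Guess whether two equally sized grids come from the same card.
    The tolerance is an arbitrary illustrative value."""
    if sum(len(r) for r in scan_a) != sum(len(r) for r in scan_b):
        raise ValueError("scans must be downsampled to the same grid size")
    distance = hamming_distance(average_hash(scan_a), average_hash(scan_b))
    return distance <= max_differing_bits


first_submission = [
    [10, 200, 30, 220],
    [15, 210, 25, 215],
    [12, 205, 35, 225],
    [11, 198, 28, 219],
]
# Same card rescanned under slightly brighter lighting.
resubmission = [[p + 3 for p in row] for row in first_submission]
# A different card with the light and dark areas swapped.
different_card = [[row[1], row[0], row[3], row[2]] for row in first_submission]

print(likely_same_card(first_submission, resubmission))
print(likely_same_card(first_submission, different_card))
```

A production system would presumably key on much finer detail, such as print dots, fiber patterns, or edge micro-geometry, since two copies of the same card look identical at this resolution. The sketch only shows why a rescan of the same physical object can be matched despite small lighting differences.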

The point is: AI isn’t some far-off fantasy for card grading – it’s here. Multiple firms have developed working systems that scan cards, apply the known grading criteria, and produce a result with blinding speed and precision. A newly launched outfit, Zeagley Grading, showcased in 2025 a fully automated AI grading platform that checks “thousands of high-resolution checkpoints” on each card’s surface, corners, and edges. Zeagley provides a QR-coded digital report with every slab explaining exactly how the grade was determined, bringing transparency to an area long criticized for its opacity. The system is so confident in its consistency that they’ve offered a public bounty: crack a Zeagley-slabbed card and resubmit it – if it doesn’t come back with the exact same grade, they’ll pay you $1,000. That is the kind of repeatability collectors dream of. It might sound revolutionary, but as Zeagley’s founders themselves put it, “What we’re doing now isn’t groundbreaking at all – it’s what’s coming next that is.” In truth, grading a piece of glossy cardboard with a machine should be straightforward in 2025. We have the tech – it’s the will to use it that’s lagging.

Why the Slow Adoption? (Ulterior Motives?)

If AI grading is so great, why haven’t the big players fully embraced it? The resistance comes from a mix of practical and perhaps self-serving reasons. On the practical side, companies like PSA and Beckett have decades of graded cards in circulation. A sudden shift to machine-grading could introduce slight changes in standards – for example, the AI might technically grade tougher on centering or surface than some human graders have historically. This raises a thorny question: would yesterday’s PSA 10 still be a PSA 10 under a new automated system? The major graders are understandably cautious about undermining the consistency (or at least continuity) of their past population reports. PSA’s leadership has repeatedly stated that their goal is to assist human graders with technology, not replace them. They likely foresee a gradual integration where AI catches the easy stuff – measuring centering, flagging obvious print lines or dents – and humans still make the final judgment calls, keeping a “human touch” in the loop.

But there’s also a more cynical view in hobby circles: the status quo is just too profitable. PSA today is bigger and more powerful than ever – flush with record revenue from the grading boom and enjoying market dominance (grading nearly 4 out of every 5 cards in the hobby). The lack of consistency in human grading actually drives more business for them. Think about it: if every card got a perfectly objective grade, once and for all, collectors would have little reason to ever resubmit a card or chase a higher grade. The reality today is very different. Many collectors will crack out a PSA 9 and roll the dice again, essentially paying PSA twice (or more) for grading the same card, hoping for that elusive Gem Mint label. There’s an entire cottage industry of group submitters and dealers who bank on finding undergraded cards and bumping them up on resubmission. It’s not far-fetched to suggest that PSA has little incentive to eliminate that lottery aspect of grading. Even PSA’s own Genamint acquisition, which introduced card fingerprinting to catch resubmissions, could be a double-edged sword – if they truly used it to reject previously-graded cards, it might dry up a steady stream of repeat orders. As one commentator wryly observed, “if TAG/AI grading truly becomes a problem [for PSA], PSA would integrate it… but for now it’s not, so we have what we get.” In other words, until the tech-savvy upstarts start eating into PSA’s market share, PSA can afford to move slowly.

There’s also the human factor of collector sentiment. A segment of the hobby simply prefers the traditional approach. The idea of a seasoned grader, someone who has handled vintage Mantles and modern Prizm rookies alike, giving their personal approval still carries weight. Some collectors worry that an algorithm might be too severe, or fail to appreciate an intangible “eye appeal” that a human might allow. PSA’s brand is built not just on plastic slabs, but on the notion that people – trusted experts – are standing behind every grade. Handing that over entirely to machines risks alienating those customers who aren’t ready to trust a computer over a well-known name. As a 2024 article on the subject noted, many in the hobby still see AI grading as lacking the “human touch” and context for certain subjective calls. It will take time for perceptions to change.

Still, these concerns feel less convincing with each passing year. New collectors entering the market (especially from the tech world) are often stunned at how low-tech the grading process remains. Slow, secretive, and expensive is how one new AI grading entrant described the incumbents – pointing to the irony that grading fees can scale up based on card value (PSA charges far more to grade a card worth $50,000 than a $50 card), a practice seen by some as a form of price-gouging. An AI-based service, by contrast, can charge a flat rate per card regardless of value, since the work and cost to the company are the same whether the card is cheap or ultra-valuable. These startups argue they have no conflicts of interest – the algorithm doesn’t know or care what card it’s grading, removing any unconscious bias or temptation to cut corners for high-end clients. In short, technology promises an objective fairness that the current system can’t match.

Upstart Efforts: Tech Takes on the Titans

In the past few years, a number of new grading companies have popped up promising to disrupt the market with technology. Hybrid Grading Approach (HGA) made a splash in 2021 by advertising a “hybrid” model: cards would be initially graded by an AI-driven scanner, then verified by two human graders. HGA also offered flashy custom labels and quicker turnaround times. For a moment, it looked like a strong challenger, but HGA’s momentum stalled amid reports of inconsistent grades and operational missteps (underscoring that fancy tech still needs solid execution behind it).

TAG Grading, mentioned earlier, took a more hardcore tech route – fully computerized grading with proprietary methods and a plethora of data provided to the customer. TAG’s system, however, launched with limitations: initially they would only grade modern cards (1989-present) and standard card sizes, likely because their imaging system needed retraining or reconfiguration for vintage cards, thicker patch cards, die-cuts, etc. This highlights a challenge for any AI approach: it must handle the vast variety of cards in the hobby, from glossy Chrome finishes to vintage cardboard, and even odd-shaped cards or acetates. TAG chose to roll out methodically within its comfort zone. The result has been rave reviews from a small niche – those who tried TAG often praise the “transparent grading report” showing every flaw – but TAG remains a tiny player. Despite delivering what many consider a better mousetrap, they have not come close to denting PSA’s dominance.

Arena Club, backed by a sports icon’s star power, also discovered how tough it is to crack the market. As Arena’s CFO acknowledged, “PSA is dominant, which isn’t news to anyone… it’s definitely going to be a longer road” to convince collectors. Arena pivoted to position itself not just as a grading service but a one-stop marketplace (offering vaulting, trading, even “Slab Pack” digital reveal products). In doing so, they tacitly recognized that trying to go head-to-head purely on grading technology wasn’t enough. Collectors still gravitate to PSA’s brand when it comes time to sell big cards – even if the Arena Club slab has the same card graded 10 with an AI-certified report, many buyers simply trust PSA more. By late 2024, Arena Club boasted that cards in their AI-grade slabs “have sold for almost the same prices as cards graded by PSA,” but “almost the same” implicitly concedes a gap. The market gives PSA a premium, deservedly or not.

New entrants continue to appear. Besides TAG and Arena, we’ve seen firms like AGS (Automated Grading Systems) targeting the Pokémon and TCG crowd with a fully automated “Robograding” service. AGS uses lasers and scanners to find microscopic defects “easily missed by even the best human graders,” and provides sub-scores and images of each flaw. Their pitch is that they grade 10x faster, more accurately, and cheaper – yet their footprint in the sports card realm is still small. The aforementioned Zeagley launched in mid-2025 with a flurry of press, even offering on-site instant grading demos at card shows. Time will tell if they fare any better. So far, each tech-focused upstart has either struggled to gain trust or found itself constrained to a niche, while PSA is grading more cards than ever (up 21% in volume last year) and even raising prices for premium services. In effect, the incumbents have been able to watch these challengers from a position of strength and learn from their mistakes.

PSA: Bigger Than Ever, But Is It Better?

It’s worth noting that PSA hasn’t been entirely tech-averse. They use advanced scanners at intake, have implemented card fingerprinting and alteration-detection algorithms (courtesy of Genamint) behind the scenes, and likely use software to assist with centering measurements. Nat Turner, who leads PSA’s parent company, is a tech entrepreneur himself and clearly sees the long-term importance of innovation. But from an outsider’s perspective, PSA’s grading process in 2025 doesn’t look dramatically different to customers than it did a decade ago: you send your cards in, human graders assign a 1–10 grade, and you get back a slab with no explanation whatsoever of why your card got the grade it did. If you want more info, you have to pay for a higher service tier and even then you might only get cursory notes. This opacity is increasingly hard to justify when competitors are providing full digital reports by default. PSA’s stance seems to be that its decades of experience are the secret sauce – that their graders’ judgment cannot be fully replicated by a machine. It’s a defensible position given their success, but also a conveniently self-serving one. After all, if the emperor has ruled for this long, why acknowledge any need for a new way of doing things?

However, cracks (no pun intended) are showing in the facade. The hobby has not forgotten the controversies where human graders slipped up – like the scandal a few years ago where altered cards (trimmed or recolored) managed to get past graders and into PSA slabs, rocking the trust in the system. Those incidents suggest that even the best experts can be duped or make errors that a well-trained AI might catch via pattern recognition or measurement consistency. PSA has since leaned on technology more for fraud detection (Genamint’s ability to spot surface changes or match a card to a known altered copy is likely in play), which is commendable. But when it comes to the routine task of assigning grades, PSA still largely keeps that as an art, not a science.

To be fair, PSA (and rivals like Beckett and SGC) will argue that their human-led approach ensures a holistic assessment of each card. A grader might overlook one tiny print dot if the card is otherwise exceptional, using a bit of reasonable discretion, whereas an algorithm might deduct points rigidly. They might also argue that collectors themselves aren’t ready to accept a purely AI-driven grade, especially for high-end vintage where subtle qualities matter. There’s truth in the notion that the hobby’s premium prices often rely on perceived credibility – and right now, PSA’s brand carries more credibility than a newcomer robot grader in the eyes of many auction bidders. Thus, PSA can claim that by sticking to (and refining) their human grading process, they’re actually protecting the market’s trust and the value of everyone’s collections. In short: if it ain’t broke (for them), why fix it?

The Case for Change: Consistency, Transparency, Trust

Despite PSA’s dominance, the case for an AI-driven shakeup in grading grows stronger by the day. The hobby would benefit enormously from grading that is consistent, repeatable, and explainable. Imagine a world where you could submit the same card to a grading service twice and get the exact same grade, with a report detailing the precise reasons. That consistency would remove the agonizing second-guessing (“Should I crack this 9 and try again?”) and refocus everyone on the card itself rather than the grading lottery. It would also level the playing field for collectors – no more wondering if a competitor got a PSA 10 because they’re a bulk dealer who “knows a guy” or just got lucky with a lenient grader. Every card, every time, held to the same standard.

Transparency is another huge win. It’s 2025 – why are we still largely in the dark about why a card got an 8 vs. a 9? With AI grading, detailed digital grading reports are a natural output. Companies like TAG and Zeagley are already providing these: high-res imagery with circles or arrows pointing out each flaw, sub-scores for each category, and even interactive web views to zoom in on problem areas. Not only do these reports educate collectors on what to look for, they also keep the grading company honest. If the report says your card’s surface got an 8.5/10 due to a scratch and you, the collector, don’t see any scratch, you’d have grounds to question that grade immediately. In the current system, good luck – PSA simply doesn’t answer those questions beyond generic responses. Transparency would greatly increase trust in grading, ironically the very thing PSA prides itself on. It’s telling that one of TAG’s slogans is creating “transparency, accuracy, and consistency for every card graded.” Those principles are exactly what collectors have been craving.

Then there’s the benefit of speed and efficiency. AI grading systems can process cards much faster than humans. A machine can work 24/7, doesn’t need coffee breaks, and can ramp up throughput just by adding servers or scanners (whereas PSA had to physically expand to a new 130,000 sq ft facility and hire dozens of new graders to increase capacity). Faster grading means shorter turnaround times and fewer backlogs. During the pandemic, we saw how a huge backlog can virtually paralyze the hobby’s lower end – people stopped sending cheaper cards because they might not see them back for a year. If AI were fully deployed, the concept of a months-long queue could vanish. Companies like AGS brag about “grading 10,000 cards in a day” with automation; even if that’s optimistic, there’s no doubt an algorithm can scale far beyond what manual grading ever could.

Lastly, consider cost. A more efficient grading process should eventually reduce costs for both the company and the consumer. Some of the new AI graders are already undercutting on price – e.g. Zeagley offering grading at $9.99 a card for a 15-day service – whereas PSA’s list price for its economy tier floats around $19–$25 (and much more for high-value or faster service). Granted, PSA has the brand power to charge a premium, but in a competitive market a fully automated solution should be cheaper to operate per card. That savings can be passed on, which encourages more participation in grading across all value levels.

The ChatGPT Experiment: DIY Grading with AI

Perhaps the clearest proof that card grading is ripe for automation is that even hobbyists at home can now leverage AI to grade their cards in a crude way. Incredibly, thanks to advances in AI like OpenAI’s ChatGPT, a collector can snap high-resolution photos of a card (front and back), feed them into an AI model, and ask for a grading opinion. Some early adopters have done just that. One collector shared that he’s “been using ChatGPT to help hypothetically grade cards” – he uploads pictures and asks, “How does the centering look? What might this card grade on PSA’s scale?” The result? “Since I’ve started doing this, I have not received a grade lower than a 9” on the cards he chose to submit. In other words, the AI’s assessment lined up with PSA’s outcomes well enough that it saved him from sending in any card that would grade less than mint. It’s a crude use of a general AI chatbot, yet it highlights something powerful: even consumer AI can approximate grading if given the standards and some images.

Right now, examples like this are more curiosities than commonplace. Very few collectors are actually using ChatGPT or similar tools to pre-grade on a regular basis. But it’s eye-opening that it’s even possible. As image recognition AI improves and becomes more accessible, one can imagine a near-future app where you scan your card with your phone and get an instantaneous grade estimate, complete with highlighted flaws. In fact, some apps and APIs already claim to do this for pre-grading purposes. It’s not hard to imagine a scenario where collectors start publicly verifying or challenging grades using independent AI tools – “Look, here’s what an unbiased AI thinks of my card versus what PSA gave it.” If those two views diverge often enough, it could pressure grading companies to be more transparent or consistent. At the very least, it empowers collectors with more information about their own cards’ condition.

Embracing the Future: It’s Time for Change

The sports card grading industry finds itself at a crossroads between tradition and technology. PSA is king – and by many metrics, doing better than ever in terms of business – but that doesn’t mean the system is perfect or cannot be improved. Relying purely on human judgment in 2025, when AI vision systems are extraordinarily capable, feels increasingly antiquated. The hobby deserves grading that is as precise and passion-driven as the collectors themselves. Adopting AI for consistent and repeatable standards should be an easy call: it would eliminate so many pain points (inconsistency, long waits, lack of feedback) that collectors grumble about today.

Implementing AI doesn’t have to mean ousting the human experts entirely. A hybrid model could offer the best of both worlds – AI for objectivity and humans for oversight. For example, AI could handle the initial inspection, quantifying centering to the decimal and finding every tiny scratch, then a human grader could review the findings, handle any truly subjective nuances (like eye appeal or print quality issues that aren’t easily quantified), and confirm the final grade. The human becomes more of a quality control manager rather than the sole arbiter. This would massively speed up the process and tighten consistency, while still keeping a human in the loop to satisfy those who want that assurance. Over time, as the AI’s track record builds trust, the balance could shift further toward full automation.
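As a rough sketch of how that hand-off might work, the snippet below routes each card to either a quick human confirmation or a detailed human review, based on what the machine found. Everything here is hypothetical: the field names, the idea of a single confidence score, and the 0.9 cutoff are assumptions for illustration, not how any grading company is known to operate.

```python
from dataclasses import dataclass, field


@dataclass
class MachineFindings:
    """Hypothetical output of the automated first pass."""
    proposed_grade: int
    confidence: float  # 0.0 to 1.0, assumed to come from the model
    subjective_flags: list[str] = field(default_factory=list)  # e.g. "eye appeal"


def route_for_human(findings: MachineFindings, min_confidence: float = 0.9) -> str:
    """Decide how much human attention a card gets.
    Every card still gets a human sign-off; only the depth varies."""
    if findings.subjective_flags:
        return "detailed review"  # nuances a machine can't easily quantify
    if findings.confidence < min_confidence:
        return "detailed review"  # the machine itself is unsure
    return "quick confirmation"


clean_modern = MachineFindings(proposed_grade=10, confidence=0.97)
rough_cut_vintage = MachineFindings(proposed_grade=6, confidence=0.95,
                                    subjective_flags=["factory rough cut"])

print(route_for_human(clean_modern))
print(route_for_human(rough_cut_vintage))
```

The design choice worth noticing is that the human never disappears from the loop. The machine just decides where the human's limited attention is best spent.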

Ultimately, the adoption of AI in grading is not about devaluing human expertise – it’s about capturing that expertise in a reproducible way. The best graders have an eye for detail; the goal of AI is to have 1,000 “eyes” for detail and never blink. Consistency is king in any grading or authentication field. Imagine if two different coin grading experts could look at the same coin and one says “MS-65” and the other “MS-67” – coin collectors would be up in arms. And yet, in cards we often tolerate that variability as normal. We shouldn’t. Cards may differ subtly in how they’re produced (vintage cards often have rough cuts that a computer might flag as edge damage, for instance), so it’s important to train the AI on those nuances. But once trained, a machine will apply the standard exactly, every single time. That level of fairness and predictability would enhance the hobby’s integrity.

It might take more time – and perhaps a serious competitive threat – for the giants like PSA to fully embrace an AI-driven model. But the winds of change are blowing. As one tech expert quipped, a “technological revolution in grading” is coming, and one day we’ll look back and wonder how we ever trusted the old legacy process. The smarter companies will lead that revolution rather than resist it. Collectors, too, should welcome the change: an AI shakeup would make grading more of a science and less of a gamble. When you submit a card, you should be confident the grade it gets is the grade it deserves, not the grade someone felt like giving it that day. Consistency. Transparency. Objectivity. These shouldn’t be revolutionary concepts, but in the current state of sports card grading, they absolutely are.

The sports card hobby has always been a blend of nostalgia and innovation. We love our cardboard heroes from the past, but we’ve also embraced new-age online marketplaces, digital card breaks, and blockchain authentication. It’s time the critical step of grading catches up, too. Whether through an industry leader finally rolling out true AI grading, or an upstart proving its mettle and forcing change, collectors are poised to benefit. The technology is here, the need is obvious, and the hobby’s future will be brighter when every slabbed card comes with both a grade we can trust and the data to back it up. The sooner we get there, the better for everyone who loves this game.